Large language models (LLMs) are getting smarter by the week. They’re reasoning better, adapting faster and tackling more complex enterprise use cases.

But for all their power, they remain isolated. They can reason and generate, yet they don’t have safe, structured access to the data and systems that drive real business outcomes.

The Model Context Protocol (MCP) is changing how LLM systems interact with enterprise data. It’s quickly becoming a de facto standard for connecting AI agents to enterprise tools and data securely, dynamically, and at scale.

Instead of building custom integrations for every LLM deployment, MCP gives you a standardized way for AI agents to discover and use enterprise systems. It’s the bridge between reasoning and real-world action — the foundation for the next generation of agentic AI.

Why we need MCP

We’re moving quickly past simple chatbots toward autonomous AI systems: agents that can reason about problems and act on their conclusions.

Today’s AI models are powerful, but they have limited visibility into enterprise context unless they are explicitly connected to it through integrations or retrieval systems. Without large amounts of custom glue code, they can’t safely interact with your enterprise data, and each integration is one-off, fragile, and hard to maintain.

To make AI operationally useful, your agents need reliable, discoverable, and governed access to enterprise data. MCP provides the standardized layer that makes this possible.

What is the Model Context Protocol (MCP)?

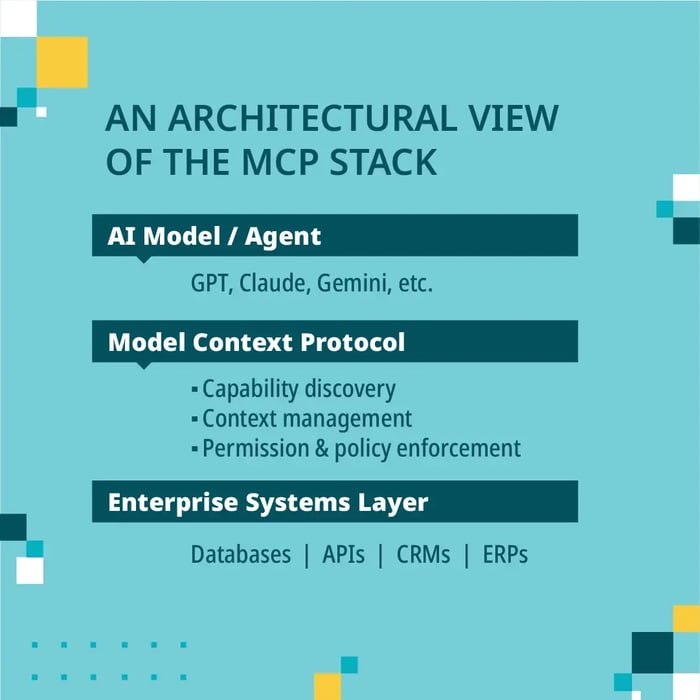

At its core, MCP is an open standard that defines how AI models and agents discover and interact with external tools, data sources, and systems.

It's a universal adapter that enables different applications to:

- Communicate

- Share information

- Cooperate

...without requiring a new custom integration for every use case.

Instead of writing hardcoded connectors each time you want your AI model to call a database, API, or file system, MCP defines a structured way for models to discover what’s available and how to use it, safely and dynamically.

Just as HTTP standardized how clients talk to servers, MCP standardizes how AI systems talk to enterprise data.

Traditional integrations vs. MCP interactions

Traditional approach:

- Write custom integration code for each AI use case

- Hard-code API endpoints and data transformations

- Maintain separate connectors for different models or vendors

- Models have no way to discover new capabilities dynamically

MCP approach:

- Models discover available tools via standardized metadata

- Capabilities can be registered, described, and invoked dynamically

- A single integration can support multiple models and agents

- Security and permissions can be built in at the protocol level

MCP replaces brittle, one-off API logic with a self-describing, governed integration layer. Your models can safely explore and use what’s available without you wiring up every endpoint manually.
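To make the contrast concrete, here is a minimal in-process sketch in Python of the MCP-style pattern: each capability is registered with a description and a JSON Schema for its inputs, the metadata is listed for the model, and calls go through one generic entry point. The `tool` decorator, `describe`, `invoke`, and `get_customer` are hypothetical names for illustration; this is not the official MCP SDK, and a real server would expose the same information over the protocol rather than as Python calls.

```python
from typing import Any, Callable

# Hypothetical in-process registry; a real MCP server would return this
# metadata in response to a tools/list request instead of a Python call.
TOOLS: dict[str, dict[str, Any]] = {}


def tool(description: str, input_schema: dict[str, Any]) -> Callable:
    """Register a function together with the metadata a model would read."""
    def decorator(fn: Callable) -> Callable:
        TOOLS[fn.__name__] = {
            "description": description,
            "inputSchema": input_schema,
            "handler": fn,
        }
        return fn
    return decorator


@tool(
    description="Look up a customer record by ID.",
    input_schema={
        "type": "object",
        "properties": {"customer_id": {"type": "string"}},
        "required": ["customer_id"],
    },
)
def get_customer(customer_id: str) -> dict[str, str]:
    # Stand-in for a real CRM or MDM query.
    return {"id": customer_id, "name": "Example Corp"}


def describe() -> list[dict[str, Any]]:
    """What a model sees: names and metadata, never the Python handlers."""
    return [
        {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
        for name, t in TOOLS.items()
    ]


def invoke(name: str, arguments: dict[str, Any]) -> Any:
    """One generic entry point instead of a hand-written connector per tool."""
    return TOOLS[name]["handler"](**arguments)


print([t["name"] for t in describe()])
print(invoke("get_customer", {"customer_id": "C-42"}))
```

Adding a second capability means adding one decorated function; neither `describe` nor `invoke` changes.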

How MCP architecture works

MCP uses a simple client-server model, adapted for AI: the server describes its own capabilities, and the client discovers and invokes them at runtime.

1. MCP client

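Usually the AI application or agent framework that wraps your LLM. (Strictly, the MCP specification calls that application the host, which runs one client per server connection.)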

It discovers tools, resources, and prompts through the protocol and invokes them as needed.

2. MCP server

Sits between your AI client and your enterprise systems.

It exposes structured capabilities, such as tools, resources, and prompts, that the AI can query and use.

3. Data source

The actual backend systems, such as databases, APIs, document stores, and ERP systems.

The MCP server bridges these to the model in a standardized, discoverable way.
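The three roles can be sketched in Python with an in-memory SQLite table as the data source. The `SupplierServer` class is hypothetical; its two methods return results shaped like MCP’s `resources/list` and `resources/read` responses (a resource `uri`, `name`, and `mimeType`; `contents` carrying `text`), but the JSON-RPC transport and session setup a real server needs are left out. The `supplier://` URI scheme is also made up for this example.

```python
import json
import sqlite3
from typing import Any

# 3. Data source: an in-memory SQLite table stands in for a real backend.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE supplier (id TEXT PRIMARY KEY, name TEXT, country TEXT)")
db.executemany(
    "INSERT INTO supplier VALUES (?, ?, ?)",
    [("S-001", "Acme Parts", "DE"), ("S-002", "Globex Metals", "US")],
)


class SupplierServer:
    """2. MCP-style server: turns backend rows into discoverable resources."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn

    def list_resources(self) -> dict[str, Any]:
        # Result shape modeled on MCP's resources/list.
        rows = self.conn.execute("SELECT id, name FROM supplier ORDER BY id")
        return {"resources": [
            {"uri": f"supplier://{sid}", "name": name, "mimeType": "application/json"}
            for sid, name in rows
        ]}

    def read_resource(self, uri: str) -> dict[str, Any]:
        # Result shape modeled on MCP's resources/read.
        sid = uri.removeprefix("supplier://")
        row = self.conn.execute(
            "SELECT id, name, country FROM supplier WHERE id = ?", (sid,)
        ).fetchone()
        if row is None:
            raise KeyError(uri)
        record = dict(zip(("id", "name", "country"), row))
        return {"contents": [
            {"uri": uri, "mimeType": "application/json", "text": json.dumps(record)}
        ]}


# 1. Client: discovers what exists, then reads it, without writing any SQL.
server = SupplierServer(db)
uris = [r["uri"] for r in server.list_resources()["resources"]]
first = json.loads(server.read_resource(uris[0])["contents"][0]["text"])
print(uris, first)
```

The client never sees the table layout; swapping SQLite for an ERP API would change only the server.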

How it differs from REST APIs

Traditional REST APIs are consumed statically. You must know the endpoints, parameters, and authentication scheme before calling them, typically from documentation written for human developers.

MCP flips that model.

The server advertises what it can do and which data and actions are available. The client (model) can discover those capabilities at runtime and invoke them dynamically. In effect, this is introspection for APIs, designed specifically for AI agents.
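The discovery-then-call exchange looks roughly like this on the wire. MCP messages are JSON-RPC 2.0, and `tools/list` and `tools/call` are the protocol methods for listing and invoking tools; everything else in this Python sketch (the `check_inventory` tool, the in-process `server_handle` function standing in for a real transport, and the stock data) is hypothetical, and initialization and capability negotiation are omitted.

```python
import json
from typing import Any, Optional

# Hypothetical server state: the tools it chooses to advertise.
ADVERTISED_TOOLS = [{
    "name": "check_inventory",
    "description": "Return the on-hand quantity for a product SKU.",
    "inputSchema": {
        "type": "object",
        "properties": {"sku": {"type": "string"}},
        "required": ["sku"],
    },
}]
STOCK = {"SKU-1": 17}


def server_handle(message: str) -> str:
    """Answer JSON-RPC 2.0 requests for tools/list and tools/call."""
    req = json.loads(message)
    params = req.get("params", {})
    if req["method"] == "tools/list":
        result: dict[str, Any] = {"tools": ADVERTISED_TOOLS}
    elif req["method"] == "tools/call" and params.get("name") == "check_inventory":
        qty = STOCK.get(params["arguments"]["sku"], 0)
        result = {"content": [{"type": "text", "text": str(qty)}], "isError": False}
    else:
        # -32601 is the standard JSON-RPC "Method not found" error code.
        return json.dumps({"jsonrpc": "2.0", "id": req["id"],
                           "error": {"code": -32601, "message": "Method not found"}})
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


def rpc(req_id: int, method: str, params: Optional[dict[str, Any]] = None) -> dict[str, Any]:
    """Client helper: serialize a request, 'send' it, and parse the reply."""
    msg: dict[str, Any] = {"jsonrpc": "2.0", "id": req_id, "method": method}
    if params is not None:
        msg["params"] = params
    return json.loads(server_handle(json.dumps(msg)))


# 1. Discover: the client hardcodes no endpoint, only the protocol methods.
tools = rpc(1, "tools/list")["result"]["tools"]
# 2. Invoke the advertised tool by name with structured arguments.
reply = rpc(2, "tools/call", {"name": tools[0]["name"], "arguments": {"sku": "SKU-1"}})
print(reply["result"]["content"][0]["text"])  # prints 17
```

The only thing the client hardcodes is the protocol itself; the tool name comes from the server’s own listing.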

Tools register themselves

An MCP server describes every tool it offers and returns those descriptions whenever a client asks:

- What they do

- What parameters they expect

- What permissions are required
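For illustration, here is one such description in Python, in the shape MCP servers advertise (a `name`, a human-readable `description`, and a JSON Schema `inputSchema`), along with a helper that renders it the way an agent might summarize it. As far as we know, the MCP specification does not define a standard per-tool permission field, so the `REQUIRED_SCOPES` map, the `update_price` tool, and `describe_for_model` are hypothetical.

```python
from typing import Any

# A tool description in the shape MCP servers advertise: name, description,
# and a JSON Schema describing the expected inputs.
UPDATE_PRICE = {
    "name": "update_price",
    "description": "Set the list price of a product in the PIM.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "sku": {"type": "string", "description": "Product SKU"},
            "price": {"type": "number", "description": "New price in EUR"},
        },
        "required": ["sku", "price"],
    },
}

# Hypothetical: no standard per-tool permission field is assumed, so this
# sketch keeps required scopes in a server-side map keyed by tool name.
REQUIRED_SCOPES = {"update_price": ["pim:write"]}


def describe_for_model(tool: dict[str, Any]) -> str:
    """Render what an agent needs to decide whether and how to call a tool."""
    schema = tool["inputSchema"]
    required = schema.get("required", [])
    params = ", ".join(
        f"{name}: {spec['type']}" + ("" if name in required else " (optional)")
        for name, spec in schema["properties"].items()
    )
    scopes = ", ".join(REQUIRED_SCOPES.get(tool["name"], [])) or "none"
    return f"{tool['name']}({params}) - {tool['description']} [requires: {scopes}]"


print(describe_for_model(UPDATE_PRICE))
```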

The AI agent can:

- Query what’s available

- Read descriptions to understand tools and data schemas

- Invoke them with structured parameters

- Receive predictable, machine-readable responses

This discovery-first design makes MCP well suited to agentic workflows, where the AI reasons about which tools to use, in what order, and why.
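The client side of that loop can be sketched in Python under a few assumptions: arguments are checked against the tool’s advertised `inputSchema` before the call, and the reply follows the `tools/call` result shape of text `content` blocks plus an `isError` flag. `validate_arguments` covers only required keys and primitive types, a small subset of JSON Schema, and both helper names are hypothetical; production code would use a full JSON Schema validator.

```python
from typing import Any

# Mapping from JSON Schema primitive types to Python types.
JSON_TYPES = {"string": str, "number": (int, float), "integer": int,
              "boolean": bool, "object": dict, "array": list}


def validate_arguments(schema: dict[str, Any], args: dict[str, Any]) -> list[str]:
    """Check required keys and primitive types; a small subset of JSON Schema."""
    errors = [f"missing required argument '{k}'"
              for k in schema.get("required", []) if k not in args]
    for key, value in args.items():
        spec = schema.get("properties", {}).get(key)
        if spec is None:
            errors.append(f"unknown argument '{key}'")
            continue
        expected = spec.get("type")
        if expected in JSON_TYPES:
            # bool subclasses int in Python, so reject it explicitly for numbers.
            wrong_bool = isinstance(value, bool) and expected in ("number", "integer")
            if wrong_bool or not isinstance(value, JSON_TYPES[expected]):
                errors.append(f"'{key}' should be {expected}")
    return errors


def text_from_result(result: dict[str, Any]) -> str:
    """Join the text blocks of a tools/call result; raise if the tool reported an error."""
    text = "\n".join(c["text"] for c in result.get("content", []) if c.get("type") == "text")
    if result.get("isError"):
        raise RuntimeError(text)
    return text


schema = {"type": "object",
          "properties": {"sku": {"type": "string"}, "qty": {"type": "integer"}},
          "required": ["sku"]}
print(validate_arguments(schema, {"qty": "5"}))
print(text_from_result({"content": [{"type": "text", "text": "17 units"}], "isError": False}))
```

Rejecting bad arguments on the client gives the model a precise error to reason about before any enterprise system is touched.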

Why MCP matters for engineering teams

Agentic AI is changing how we architect systems. Incorporating MCP now gives engineering teams a future-proof integration model for AI systems that act autonomously across enterprise environments.

Key advantages:

- Less integration plumbing: Each enterprise system can expose its own MCP server, and AI agents can discover and use multiple MCP servers simultaneously, with no per-LLM connectors.

- Future-ready: As new AI models appear, MCP-capable clients built on them can use your existing MCP layer immediately, so there’s no need to rebuild integrations.

- Vendor-neutral: You’re not locked into any specific model or vendor SDK. MCP is an open standard.

- Scalable and secure: Governance, permissions, and auditability can be enforced once, at the MCP layer, rather than separately in every integration.

- Reduced duplication: No more rewriting connection logic or data access patterns for each use case. The same MCP layer powers multiple agents, securely.

MCP acts as the middle layer that translates between the language of enterprise systems and the reasoning of AI models.
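The first advantage, one client working across several servers, can be sketched as a client-side catalog in Python. `FakeServer` stands in for a real MCP server connection, and the `server.tool` naming scheme is a convention assumed here to avoid collisions between servers that expose tools with the same name; it is not something the MCP specification mandates.

```python
from typing import Any, Callable


class FakeServer:
    """Stand-in for one MCP server; in practice each is a separate process or endpoint."""

    def __init__(self, name: str, tools: dict[str, Callable[..., Any]]) -> None:
        self.name = name
        self._tools = tools

    def list_tools(self) -> list[str]:
        return sorted(self._tools)

    def call_tool(self, tool: str, arguments: dict[str, Any]) -> Any:
        return self._tools[tool](**arguments)


class ToolCatalog:
    """Client-side aggregation: one catalog over many servers, no per-model connector."""

    def __init__(self, servers: list[FakeServer]) -> None:
        # Qualify names as "server.tool" so identical tool names don't collide.
        self._routes = {f"{s.name}.{t}": (s, t) for s in servers for t in s.list_tools()}

    def names(self) -> list[str]:
        return sorted(self._routes)

    def call(self, qualified: str, arguments: dict[str, Any]) -> Any:
        server, tool = self._routes[qualified]
        return server.call_tool(tool, arguments)


pim = FakeServer("pim", {"get_product": lambda sku: {"sku": sku, "name": "Widget"}})
mdm = FakeServer("mdm", {"get_customer": lambda cid: {"id": cid, "tier": "gold"},
                         "search": lambda q: [q]})
analytics = FakeServer("analytics", {"search": lambda q: [f"trend:{q}"]})

catalog = ToolCatalog([pim, mdm, analytics])
print(catalog.names())
print(catalog.call("analytics.search", {"q": "widgets"}))
```

Connecting a new system means adding one more server to the list; the agent code that reads `names()` and calls `call()` stays the same.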

Examples of agentic workflows powered by MCP

1. Intelligent content generation

Your marketing team needs a product presentation, but the data is scattered:

- Product specs in your PIM

- Customer insights in your Customer MDM

- Market data in your analytics platform

With traditional automation, you have a fragile script that queries systems A, B, and C in order. If one schema changes, it breaks.

But an MCP-enabled agent:

- Discovers relevant enterprise data sources.

- Queries only the systems that contain needed info.

- Synthesizes insights into a coherent presentation.

- Adapts dynamically to schema or data changes.
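A toy version of that agent loop is sketched below in Python. The tool names and descriptions are hypothetical, and the keyword match in `select_sources` is only a stand-in for the model reasoning over the descriptions it discovered; the tool results are simulated rather than fetched over MCP.

```python
from typing import Any

# Hypothetical descriptions advertised by several MCP servers.
SOURCES = {
    "pim.get_specs": "Product specifications, dimensions, and features by SKU",
    "mdm.customer_segments": "Customer segments and insights from master data",
    "analytics.market_share": "Market data: share and growth by region",
    "hr.org_chart": "Employee org chart and reporting lines",
}


def select_sources(needs: list[str], sources: dict[str, str]) -> list[str]:
    """Keep only tools whose description mentions a needed topic.

    A keyword match stands in for the model's own reasoning over descriptions.
    """
    return [name for name, desc in sources.items()
            if any(need.lower() in desc.lower() for need in needs)]


def build_outline(results: dict[str, Any]) -> list[str]:
    """Turn per-source results into presentation sections, skipping empty ones."""
    return [f"{name.split('.')[0].upper()}: {data}" for name, data in results.items() if data]


needs = ["product", "customer", "market"]
chosen = select_sources(needs, SOURCES)
# Simulated tool results; a real agent would call each chosen tool over MCP.
fake_results = {name: f"<data from {name}>" for name in chosen}
print(chosen)
print(build_outline(fake_results))
```

Because selection runs over whatever descriptions are currently advertised, a new or renamed source is picked up without editing the loop, which is the property the fixed A-then-B-then-C script lacks.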

2. Data quality analysis and pattern recognition

Your data team suspects quality issues in supplier data.

With a traditional approach, predefined scripts check static validation rules.

But an MCP-enabled agent:

- Discovers available data domains and validation tools.

- Analyzes datasets to find anomalies *you didn’t predefine*.

- Applies business rules dynamically.

- Generates contextual quality reports and remediation suggestions.

The result is an adaptive, intelligent data quality analysis that evolves with your data.
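As one example of a validation tool an MCP server could expose to such an agent, here is a Python sketch that flags missing values, duplicate IDs, and numeric outliers in supplier records. The function name, field names, sample data, and the modified z-score rule are assumptions for illustration; in practice the agent would decide which fields to inspect based on the schemas it discovers. Unlike a fixed range check, the outlier rule adapts to whatever distribution the data has.

```python
import statistics
from typing import Any


def find_anomalies(records: list[dict[str, Any]], field: str,
                   threshold: float = 3.5) -> dict[str, list[str]]:
    """Flag missing values, duplicate IDs, and numeric outliers in one field.

    Outliers use the modified z-score (0.6745 * deviation / MAD), a common
    robust heuristic; 3.5 is a conventional cutoff, not an MCP rule.
    """
    report: dict[str, list[str]] = {"missing": [], "duplicate_id": [], "outlier": []}
    seen: set[str] = set()
    values: list[tuple[str, float]] = []
    for rec in records:
        rid = rec["id"]
        if rid in seen:
            report["duplicate_id"].append(rid)
        seen.add(rid)
        if rec.get(field) is None:
            report["missing"].append(rid)
        else:
            values.append((rid, float(rec[field])))
    if len(values) >= 3:
        med = statistics.median(v for _, v in values)
        mad = statistics.median(abs(v - med) for _, v in values)
        if mad > 0:
            report["outlier"] = [rid for rid, v in values
                                 if 0.6745 * abs(v - med) / mad > threshold]
    return report


suppliers = [
    {"id": "S1", "lead_time_days": 10},
    {"id": "S2", "lead_time_days": 12},
    {"id": "S3", "lead_time_days": 11},
    {"id": "S4", "lead_time_days": 95},
    {"id": "S5", "lead_time_days": None},
    {"id": "S2", "lead_time_days": 13},
]
print(find_anomalies(suppliers, "lead_time_days"))
```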

3. Documentation generation

Developers rarely update API documentation consistently.

With a traditional approach, updates are made manually and often fall out of sync.

But an MCP-enabled agent:

- Traverses your live codebase and APIs.

- Detects undocumented endpoints or deprecated routes.

- Generates and updates documentation automatically.

Your documentation stays in sync with reality, not just intention.
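The drift check at the heart of that workflow is simple set arithmetic once an agent can see both sides. In this Python sketch, the route list and documentation set are hypothetical inputs that MCP tools might supply (one reading the live service, one reading the docs); `Route` and `doc_drift` are illustrative names, not part of any framework.

```python
from typing import NamedTuple


class Route(NamedTuple):
    method: str
    path: str
    deprecated: bool = False


def doc_drift(live: list[Route], documented: set[tuple[str, str]]) -> dict[str, list[str]]:
    """Compare the routes a service actually serves with the ones its docs describe."""
    def fmt(key: tuple[str, str]) -> str:
        return f"{key[0]} {key[1]}"

    live_keys = {(r.method, r.path) for r in live}
    return {
        "undocumented": sorted(fmt(k) for k in live_keys - documented),
        "stale_in_docs": sorted(fmt(k) for k in documented - live_keys),
        "deprecated": sorted(fmt((r.method, r.path)) for r in live if r.deprecated),
    }


# Hypothetical inputs: in an MCP setup, one tool might list live routes from the
# running service and another might read the current documentation set.
live_routes = [
    Route("GET", "/products"),
    Route("GET", "/products/{sku}"),
    Route("POST", "/orders"),
    Route("GET", "/v1/prices", deprecated=True),
]
documented = {("GET", "/products"), ("GET", "/v1/prices"), ("DELETE", "/orders/{id}")}

print(doc_drift(live_routes, documented))
```

The agent’s job is then to turn each bucket into an edit: document the new routes, remove the stale ones, and mark deprecated routes as such.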

The road ahead

MCP is quickly emerging as the connective tissue between reasoning and real-world action for AI.

As MCP adoption continues to grow, we anticipate more open-source MCP servers and software development kits (SDKs) tailored to widely used systems, along with standardized tools for building, testing, and securing MCP endpoints.

Furthermore, AI platforms and orchestration tools are expected to offer native support for MCP clients, streamlining integration and expanding capabilities.

MCP as the foundation for intelligent data interaction

MCP doesn’t replace your data infrastructure; it amplifies it. If your organization is already investing in Master Data Management (MDM) and Data-as-a-Service (DaaS) platforms, MCP acts as the connective tissue that lets AI agents use that data intelligently and responsibly.

Your MDM systems already ensure data quality, consistency, and governance across domains. DaaS exposes that trusted data through APIs or cloud services for consumption.

What’s been missing until now is a standardized way for AI models to discover, understand, and interact with those services autonomously. That’s exactly where MCP fits.

By layering MCP on top of MDM and DaaS, you turn static, API-driven access into a dynamic, context-aware interaction model:

- Agents can discover which master data entities or DaaS endpoints are available.

- MCP schemas provide semantic context, so models understand what the data represents.

- Built-in permission and policy layers ensure data is accessed in compliance with enterprise governance.

The result is an ecosystem where your AI systems can not only retrieve data, but reason about its meaning, lineage, and usage within enterprise policies.
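A minimal Python sketch of the governance piece follows, assuming the MCP server itself enforces a per-entity policy before returning master data. `EntityPolicy`, `POLICIES`, `read_entity`, `AUDIT_LOG`, and the role names are hypothetical. The sketch returns a semantic description with each record, checks the caller’s roles, masks sensitive fields, and logs every decision for audit.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class EntityPolicy:
    """Hypothetical governance rule for one master data entity."""
    description: str                      # semantic context surfaced to the model
    allowed_roles: set[str]
    masked_fields: set[str] = field(default_factory=set)


POLICIES = {
    "customer": EntityPolicy("Golden customer record, deduplicated across CRM and ERP",
                             allowed_roles={"sales", "support"},
                             masked_fields={"tax_id"}),
    "supplier": EntityPolicy("Approved supplier master record",
                             allowed_roles={"procurement"}),
}

AUDIT_LOG: list[str] = []


def read_entity(entity: str, record: dict[str, Any], caller_roles: set[str]) -> dict[str, Any]:
    """Return a record only if policy allows it, masking sensitive fields and logging access."""
    policy = POLICIES[entity]
    allowed = bool(policy.allowed_roles & caller_roles)
    AUDIT_LOG.append(f"{entity}:{'granted' if allowed else 'denied'}:{sorted(caller_roles)}")
    if not allowed:
        raise PermissionError(f"roles {sorted(caller_roles)} may not read {entity}")
    data = {k: ("***" if k in policy.masked_fields else v) for k, v in record.items()}
    return {"entity": entity, "meaning": policy.description, "data": data}


customer = {"id": "C-7", "name": "Example Corp", "tax_id": "DE123456789"}
print(read_entity("customer", customer, {"sales"}))
```

Keeping the policy in the server means every agent that connects gets the same rules, rather than each agent reimplementing them.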

In essence, MCP operationalizes your data strategy for the AI era. MDM ensures data is clean and consistent, DaaS makes it accessible, and MCP makes it usable by autonomous systems.

Together, they enable a new level of data intelligence where AI agents can safely interact with the full spectrum of enterprise knowledge, driving innovation and automation at scale. MCP isn’t just an integration layer; it’s the foundation for building AI systems that act with context, compliance, and confidence.