If you've been running your organization’s product information management (PIM) or broader product data management initiatives, you know the environment changes faster than ever. You need to be constantly aware of what’s coming next. What worked two years ago feels outdated. What seemed cutting-edge six months ago has fizzled out.

Right now, five major trends are converging in PIM, bringing fundamental changes to how organizations handle product data, product content and the customer experience across every digital touchpoint and sales channel. Each trend alone would be significant. Together, they are reshaping the entire discipline and the broader PIM market.

In this article, we’ll cover what you need to know about the five trends that will define product information management in 2026 and beyond. We’ll examine each one in detail, then wrap up with tips on how to prepare proactively for the digital transformation, technological advances and market shifts ahead.

Key takeaways: 2026 PIM trends

Before we tackle them one by one, here’s a quick look at the top PIM trends shaping 2026 as enterprises adapt to new regulations, evolving technologies and increasing competitive pressures:

- AI capabilities: AI is expanding beyond basic content generation to handle complex data validation, predictive mapping and automated quality control across entire product catalogs.

- Modern architectures: Modern PIM solutions are shifting toward modular, API-first architectures that let companies integrate best-of-breed solutions and streamline operations while avoiding vendor lock-in.

- Regulatory impact: European Digital Product Passport regulations will require companies to completely rethink how they collect, store and share sustainability data throughout product lifecycles.

- Real-time data: Organizations are moving away from batch processing toward real-time data flows that keep pricing, inventory and product information synchronized across all systems and channels.

- Advanced analytics: Data analytics capabilities are becoming sophisticated enough to predict customer behavior, optimize content performance and guide strategic product decisions based on actual usage patterns.

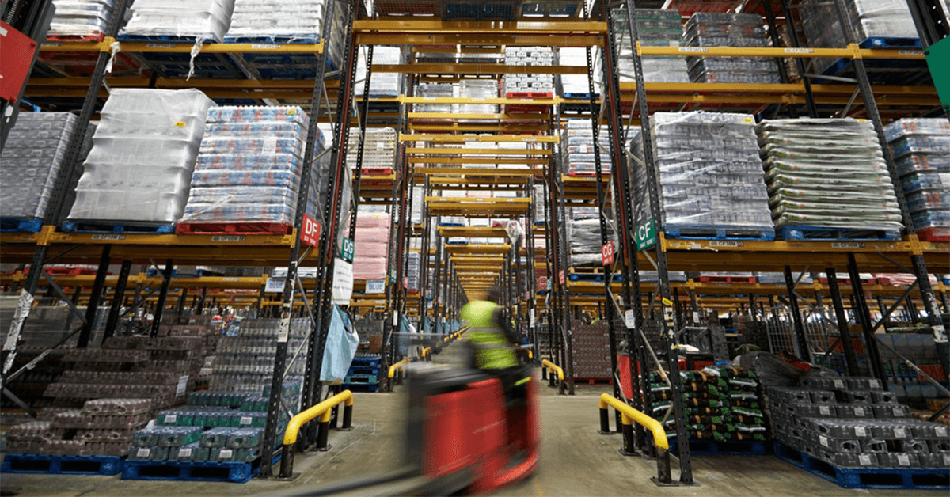

1. Gen AI is going far beyond simple content creation

Most organizations think Gen AI in PIM means writing product descriptions and other content, but they’re missing the bigger picture.

Many opportunities lie just beyond the most obvious use cases, especially in areas where humans struggle with scale and consistency. Artificial intelligence is transforming the entire product data management process and increasing operational efficiency.

Data quality validation

Instead of writing rules to catch every possible data anomaly, you can use AI-driven models that learn your data patterns and flag outliers automatically. They can spot inconsistencies that rule-based systems miss, such as product weights that seem reasonable individually but don't fit the rest of their product category. The result is more accurate data.
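To make the category-aware check concrete, here is a minimal Python sketch. It uses a modified z-score (median and median absolute deviation, computed per category) as a simple statistical stand-in for a learned anomaly-detection model. The field names (`sku`, `category`, `weight_kg`), the 3.5 threshold and the sample catalog are illustrative assumptions, not any product's API.

```python
from statistics import median


def flag_weight_outliers(products, threshold=3.5):
    """Return SKUs whose weight is an outlier within their own category."""
    # Group products by category so each one is judged against its peers
    by_category = {}
    for product in products:
        by_category.setdefault(product["category"], []).append(product)

    flagged = []
    for items in by_category.values():
        weights = [p["weight_kg"] for p in items]
        center = median(weights)
        # Median absolute deviation: a spread measure that resists outliers
        mad = median(abs(w - center) for w in weights)
        if mad == 0:
            continue  # no spread to compare against
        for p in items:
            # Modified z-score; 0.6745 makes MAD comparable to a standard deviation
            score = 0.6745 * (p["weight_kg"] - center) / mad
            if abs(score) > threshold:
                flagged.append(p["sku"])
    return flagged


# Hypothetical sample catalog
catalog = [
    {"sku": "DRL-1", "category": "cordless_drills", "weight_kg": 1.4},
    {"sku": "DRL-2", "category": "cordless_drills", "weight_kg": 1.6},
    {"sku": "DRL-3", "category": "cordless_drills", "weight_kg": 1.5},
    {"sku": "DRL-4", "category": "cordless_drills", "weight_kg": 1.7},
    {"sku": "DRL-5", "category": "cordless_drills", "weight_kg": 15.0},
    {"sku": "MOW-1", "category": "lawn_mowers", "weight_kg": 14.0},
    {"sku": "MOW-2", "category": "lawn_mowers", "weight_kg": 16.5},
    {"sku": "MOW-3", "category": "lawn_mowers", "weight_kg": 15.0},
]

print(flag_weight_outliers(catalog))  # ['DRL-5']
```

A 15 kg weight is plausible on its own (the mowers weigh about the same), but it stands out among drills. A single global "weight must be under 50 kg" rule would never catch it.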

Predictive attribute-mapping at scale

When you have thousands of products across multiple hierarchies, mapping attributes manually becomes a bottleneck. AI can analyze existing mappings, understand the relationships between product types and attributes, and then suggest mappings for new products. It learns from your taxonomy decisions and gets more accurate over time.
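The "learn from past mappings" idea can be sketched with plain frequency counts: every accepted mapping is recorded, and a new product of the same type is offered the attributes that most of its peers carry. This is a deliberately simple stand-in for a trained model; the class name, the 50% share threshold and the sample attributes are hypothetical.

```python
from collections import Counter, defaultdict


class AttributeMappingSuggester:
    """Suggest attributes for a new product based on how similar products are mapped."""

    def __init__(self, min_share=0.5):
        self.min_share = min_share
        self.counts = defaultdict(Counter)  # product type -> attribute counts
        self.totals = Counter()             # product type -> number of recorded products

    def record(self, product_type, attributes):
        # Each accepted mapping updates the counts, so suggestions improve over time
        self.counts[product_type].update(set(attributes))
        self.totals[product_type] += 1

    def suggest(self, product_type):
        total = self.totals[product_type]
        if total == 0:
            return []
        # Keep attributes used by at least min_share of products of this type
        return sorted(
            attr for attr, n in self.counts[product_type].items()
            if n / total >= self.min_share
        )


suggester = AttributeMappingSuggester()
suggester.record("running_shoe", ["size", "color", "drop_mm", "weight_g"])
suggester.record("running_shoe", ["size", "color", "drop_mm"])
suggester.record("running_shoe", ["size", "color", "waterproof"])
print(suggester.suggest("running_shoe"))  # ['color', 'drop_mm', 'size']
```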

Intelligent taxonomy management

Your product categories evolve constantly. New products don’t always fit existing categories, and seasonal items need temporary classifications. AI can analyze product attributes and descriptions to suggest where new products belong in your taxonomy, helping you categorize more effectively. It can also identify when your category structure needs updating based on emerging market trends or shifts within specific segments.
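A minimal sketch of category suggestion, assuming each category is represented by the word counts of products already classified into it. Cosine similarity over those counts is a simple substitute for the ML classifiers or embedding models a production system might use. Returning `None` when nothing scores above the (hypothetical) `min_score` is one way to surface products that may call for a new or revised category.

```python
import math
import re
from collections import Counter


def tokens(text):
    """Lowercase word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def suggest_category(description, labeled_products, min_score=0.2):
    """Suggest the best-matching category, or None if nothing fits well enough."""
    # Build one word-count profile per category from already-classified products
    profiles = {}
    for category, text in labeled_products:
        profiles.setdefault(category, Counter()).update(tokens(text))
    if not profiles:
        return None
    query = Counter(tokens(description))
    best_score, best_category = max((cosine(query, p), c) for c, p in profiles.items())
    # A low best score hints that the taxonomy may need a new category
    return best_category if best_score >= min_score else None


labeled_products = [
    ("tents", "lightweight two person backpacking tent with rain fly"),
    ("tents", "family camping tent waterproof rain fly"),
    ("sleeping_bags", "down sleeping bag rated to minus ten degrees"),
    ("sleeping_bags", "synthetic sleeping bag for summer camping"),
]
print(suggest_category("ultralight one person tent with rain fly", labeled_products))  # tents
print(suggest_category("stainless steel espresso machine", labeled_products))         # None
```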

Channel-specific content optimization

Dynamic content optimization takes this even further: AI analyzes how different product descriptions perform across channels.

- What works on your website?

- What works on Amazon?

- What works through your retail partners?

AI can then adjust content for each channel automatically, based on performance data rather than channel requirements alone. This can also inform product recommendations and improve product experience management (PXM).

Translation and localization at enterprise scale

The scale advantages are substantial. Take automated translation of millions of product records. This is not just about efficiency: a manual process that may have taken years becomes one that runs continuously. Cloud-based PIM solutions make this possible at a global scale.

In general, AI is at its best when it handles the complex, repetitive tasks that exhaust human analysts. That frees your analysts to focus on strategic decisions about data architecture, digital commerce and overall business rules.

2. Composable PIM architecture is replacing monolithic systems

Many organizations end up relying on a patchwork of point solutions: one system for product data, another for digital asset management, a third for syndication. Each tool may excel in its specific role, but getting them to work together can be a constant challenge.

Traditional monolithic PIM systems only make this harder. They tend to create rigid, fragmented workflows where teams spend more time troubleshooting integrations and maintaining connectors than improving the actual product data strategy. As a result, the PIM itself becomes a bottleneck instead of a foundation for better decision-making and data-driven growth.

How MACH principles are changing the game

Composable architecture flips this model on its head by embracing MACH principles: Microservices, API-first, Cloud-native and Headless. These may sound like tech buzzwords to some, but they represent a fundamental shift in how modern data infrastructure is built.

- Microservices: Provides modular scalability by letting you replace individual components without overhauling the entire system

- API-first design: Makes it easy to integrate specialized best-of-breed tools, such as visual search or personalization engines, for faster innovation

- Cloud-native architecture: Provides the elasticity needed to support your growing product catalog demands and multichannel syndication

- Headless approach: Decouples your data layer from presentation so you can deliver consistent omnichannel product experiences across the board

Reducing vendor lock-in risks

With monolithic systems, switching costs are enormous. You are essentially married to your vendor's roadmap, pricing changes and technical limitations. Composable architecture changes this dynamic by letting you swap out individual components based on performance, cost or new requirements.

If your search provider falls behind, you replace only the search component. If you need better analytics, you integrate a specialized analytics platform without touching your core data management.

Faster time-to-market for new capabilities

This modularity speeds up innovation. Instead of waiting for your PIM vendor to build new features, you can integrate specialized solutions right away.

If you need advanced image recognition for visual search, plug in a computer vision API. If you want more sophisticated personalization or product recommendations, connect a dedicated personalization engine. The time between identifying a need and deploying a solution can drop from months to weeks.

Enterprise scalability advantages

As your product catalog grows, different components face different scaling challenges. Your search functionality might need to handle millions of queries, but your data governance tools serve a smaller user base. With composable architecture, you can scale each component independently. You are not paying for enterprise-level everything when you only need enterprise-level performance in specific areas.

Transitions like these require careful planning. But the organizations that make this shift are seeing improvements in agility, data enrichment and total cost of ownership.

3. Digital Product Passport compliance is changing data architecture

The European Union is not asking nicely. Digital Product Passports (DPP) are coming, and they will completely change how you structure product data.

The timeline is also aggressive. The EU Digital Product Passport requirements roll out between 2026 and 2030, starting with textiles, electronics and batteries.

So, if you sell products in European markets, you need to be ready. Companies are already scrambling to understand what data they need to collect and how to structure it for compliance.

New data modeling requirements

Your current product data model probably focuses on marketing attributes, pricing and basic specifications. With Digital Product Passports, you need a completely different approach.

You now need to track:

- Material composition down to specific percentages and sourcing locations

- Manufacturing processes, including energy consumption and waste generation

- Transportation data covering every step of the supply chain

- End-of-life instructions for recycling, disposal or refurbishment

- Repair information, including spare parts availability and service manuals

This is especially important for complex products with multiple components or materials. You will need to categorize and segment products to satisfy compliance requirements.
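As an illustration of how different this data model is from a marketing-attribute model, here is a minimal Python sketch of a passport record with a completeness check. The field names and checks are hypothetical and do not follow any official DPP schema; they simply mirror the data points listed above.

```python
from dataclasses import dataclass, field


@dataclass
class MaterialShare:
    material: str
    percent: float          # share of the product, assumed to be by weight
    sourcing_country: str   # country of origin (ISO code assumed)


@dataclass
class ProductPassport:
    product_id: str
    materials: list[MaterialShare]
    manufacturing_energy_kwh: float
    manufacturing_waste_kg: float
    transport_legs: list[str] = field(default_factory=list)
    end_of_life: str = ""             # recycling, disposal or refurbishment instructions
    spare_parts_available: bool = False
    repair_manual_url: str = ""

    def validate(self):
        """Return a list of completeness problems; an empty list means the record passes."""
        problems = []
        total = sum(m.percent for m in self.materials)
        if abs(total - 100.0) > 0.01:
            problems.append(f"material shares sum to {total:.2f}%, expected 100%")
        if not self.transport_legs:
            problems.append("no transportation data")
        if not self.end_of_life:
            problems.append("missing end-of-life instructions")
        if not (self.spare_parts_available or self.repair_manual_url):
            problems.append("no repair information")
        return problems


passport = ProductPassport(
    product_id="TEE-001",  # hypothetical identifier
    materials=[
        MaterialShare("organic cotton", 95.0, "IN"),
        MaterialShare("elastane", 4.0, "DE"),
    ],
    manufacturing_energy_kwh=2.1,
    manufacturing_waste_kg=0.05,
    transport_legs=["IN -> NL, sea freight", "NL -> DE, road"],
    end_of_life="Return via textile collection for recycling",
    repair_manual_url="https://example.com/repair/tee-001",
)
print(passport.validate())  # ['material shares sum to 99.00%, expected 100%']
```

Running checks like these when supplier data arrives, rather than at reporting time, keeps gaps visible while they can still be fixed.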

Lifecycle tracking from cradle to grave

DPP requires tracking products across their entire lifecycle. This extends far beyond traditional product information management. You are essentially creating a biography for every product that follows it from raw materials through manufacturing, distribution, use and eventual end-of-life disposal.

The data architecture implications are massive. You need high-quality product management systems that can ingest data from suppliers, manufacturers, logistics providers and even customers. Across this vast ecosystem, you need to ensure data integrity, and all data must be easily accessible to regulators and consumers.

Carbon footprint integration challenges

Carbon accounting is particularly complex. You need to calculate emissions at every stage of the product lifecycle, often relying on data from suppliers who may not have sophisticated tracking systems themselves.

To meet these requirements, you will need:

- New integrations

- New data validation processes

- Advanced algorithms for tracking and verification

- New governance frameworks
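One common way to structure a lifecycle footprint is activity data multiplied by an emission factor, summed over stages. The minimal sketch below does that and also reports which stages could not be calculated, for example because a supplier did not provide data. All numbers are placeholders for illustration only; real emission factors would come from whatever vetted sources your organization adopts.

```python
def lifecycle_footprint(stages):
    """Sum kg CO2e across lifecycle stages as activity x emission factor.

    Returns the partial total and the names of stages that could not be calculated.
    """
    total = 0.0
    missing = []
    for stage in stages:
        if stage.get("activity") is None or stage.get("factor") is None:
            missing.append(stage["name"])  # e.g. a supplier did not report data
            continue
        total += stage["activity"] * stage["factor"]
    return total, missing


# Placeholder values for illustration only
stages = [
    {"name": "raw materials", "activity": 0.8, "factor": 5.0},  # kg material x kg CO2e/kg
    {"name": "manufacturing", "activity": 2.0, "factor": 0.4},  # kWh x kg CO2e/kWh
    {"name": "transport", "activity": None, "factor": 0.02},    # tonne-km not reported
    {"name": "end of life", "activity": 0.8, "factor": 0.5},    # kg x kg CO2e/kg
]
total, missing = lifecycle_footprint(stages)
print(round(total, 2), missing)  # 5.2 ['transport']
```

Returning the missing stages alongside the total makes it explicit that the figure is incomplete, which is exactly where new supplier integrations and validation processes come in.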

How compliance can be a competitive advantage

There is a fundamental opportunity most companies are missing:

Early compliance creates competitive differentiation.

More and more, consumers make purchasing decisions based on sustainability information. Therefore, retailers are starting to prioritize suppliers who can provide high-quality product information and environmental data. If you get ahead of the regulatory requirements, you can position yourself as a leader in sustainability and transparency.

By preparing for DPP now, you can:

- Make better business decisions based on accurate lifecycle and environmental data

- Improve the user experience across channels

- Strengthen your position in digital commerce markets

This all amounts to smoother market access, better supplier relationships and more consumer trust when the requirements take effect.

4. Enterprise systems are switching to real-time data synchronization

Batch processing is becoming a liability.

Inventory updates run overnight, pricing changes take hours to propagate and product information sits in staging areas waiting for the next sync window. By the time your data reaches all systems, it is already outdated.

The end of batch processing delays

With real-time synchronization, you avoid these delays completely.

- When inventory levels change in your ERP system, every channel knows immediately.

- When pricing updates, your website, marketplaces and retail partners receive the new information within seconds instead of hours.

To make this shift, you need to rethink your whole data architecture, but the benefits are massive and well worth it.

Live pricing and inventory across all channels

Nothing frustrates customers more than discovering a product is out of stock after they have decided to buy. And nothing damages your relationship with retail partners more than feeding them inaccurate inventory data.

Real-time synchronization and syndication fix both problems:

- Your e-commerce site shows actual availability

- Your marketplace listings reflect current stock levels

- Your B2B partners can make informed decisions based on real-time data

All this leads to fewer stockouts, less overselling and more satisfied customers across all channels.

Event-driven architecture fundamentals

If you decide to move to real-time, you will need an event-driven architecture. Instead of systems pulling data at scheduled intervals, any change triggers an immediate event that propagates across your whole ecosystem:

- Your e-commerce platform

- Third-party marketplaces

- Retail partner feeds

- Mobile applications

- Print catalog systems

Cross-functional governance challenges

Real-time data synchronization sounds great (and it is) until you realize the governance implications. When changes propagate instantly, you require:

- Much tighter controls on who can make what changes and when

- Approval workflows that work in real-time

- Rollback capabilities when changes cause problems

- Monitoring systems that can detect and alert on data quality issues before they reach customers
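The pattern above can be sketched in a few dozen lines of Python: a change publishes an event that every channel subscribes to, a quality gate holds suspicious changes before they go out, and a rollback broadcasts the correction. The in-process `EventBus` is a stand-in for a real message broker, and every class and function name here is hypothetical; prices are assumed to be positive.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class PriceChanged:
    sku: str
    old_price: float
    new_price: float


class EventBus:
    """Minimal in-process publish/subscribe bus, standing in for a real message broker."""

    def __init__(self):
        self.subscribers: list[Callable[[PriceChanged], None]] = []

    def subscribe(self, handler: Callable[[PriceChanged], None]) -> None:
        self.subscribers.append(handler)

    def publish(self, event: PriceChanged) -> None:
        # Push the change to every subscriber immediately, with no batch window
        for handler in self.subscribers:
            handler(event)


class PriceService:
    def __init__(self, bus: EventBus, prices: dict[str, float], max_change_ratio: float = 0.5):
        self.bus = bus
        self.prices = dict(prices)
        self.history: list[PriceChanged] = []  # applied changes, kept for rollback
        self.max_change_ratio = max_change_ratio

    def set_price(self, sku: str, new_price: float) -> None:
        old_price = self.prices[sku]
        # Quality gate: hold suspicious jumps for approval before any channel sees them
        if abs(new_price - old_price) / old_price > self.max_change_ratio:
            raise ValueError(f"{sku}: change from {old_price} to {new_price} needs approval")
        self.prices[sku] = new_price
        event = PriceChanged(sku, old_price, new_price)
        self.history.append(event)
        self.bus.publish(event)

    def rollback_last(self) -> None:
        # Revert the most recent change and broadcast the correction to every channel
        last = self.history.pop()
        self.prices[last.sku] = last.old_price
        self.bus.publish(PriceChanged(last.sku, last.new_price, last.old_price))


def channel_feed(store: dict[str, float]) -> Callable[[PriceChanged], None]:
    """Build a handler that mirrors price events into one channel's listings."""
    def handle(event: PriceChanged) -> None:
        store[event.sku] = event.new_price
    return handle


bus = EventBus()
website, marketplace = {}, {}
bus.subscribe(channel_feed(website))
bus.subscribe(channel_feed(marketplace))

service = PriceService(bus, {"SKU-1": 20.0})
service.set_price("SKU-1", 18.0)      # both channels now list 18.0
try:
    service.set_price("SKU-1", 1.8)   # a 90% drop is held for approval
except ValueError as err:
    print(err)
service.rollback_last()               # both channels revert to 20.0
print(website, marketplace)
```

Putting the gate before `publish` matters: once an event is out, every channel has already acted on it, so validation has to happen at the source.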

The infrastructure investment you need

This transformation is not cheap. You need robust API infrastructure, solid monitoring systems and failover capabilities to ensure reliability.

But consider the cost of not making this investment.

Every hour of delay in price updates costs money. Every inventory discrepancy damages customer relationships. Every manual reconciliation process consumes resources that could be better spent on strategic initiatives.

If your organization makes this transition, your operations will be far more efficient and your customers more satisfied. So, the question is not whether to make this shift, but how quickly you can execute it.

5. Advanced analytics are enabling product performance optimization

Most product data analytics focus on what happened. What really gives you an edge, though, is predicting what will happen next.

In product information management, this is changing fast. For years, PIM analytics meant dashboards showing data completeness percentages and workflow status updates. You measured how much product information you had, not how well it performed.

Now that approach is becoming obsolete, as organizations realize their product data contains predictive intelligence waiting to be unlocked.

Demand forecasting using attribute correlations

Your product attributes have predictive signals you are probably not using:

- Color preferences shift seasonally

- Material choices correlate with geographic regions

- Size distributions follow patterns you can model and predict

With advanced analytics platforms, you can analyze these attribute correlations to forecast demand at granular levels. Instead of predicting that "winter jackets will sell well," you can predict that medium-sized navy wool coats will outperform large black polyester ones in specific markets. That level of granularity completely changes inventory planning and product development decisions.
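The simplest possible baseline for attribute-level forecasting is to average historical units per attribute combination within a season and market, then rank the combinations. The sketch below does just that; real demand models would also account for trends, pricing and interactions between attributes. The field names and sales figures are invented for illustration.

```python
from collections import defaultdict
from statistics import mean


def forecast_by_attributes(sales, season, market):
    """Rank attribute combinations by average past units in one season and market."""
    history = defaultdict(list)
    for row in sales:
        if row["season"] == season and row["market"] == market:
            key = (row["size"], row["color"], row["material"])
            history[key].append(row["units"])
    forecast = {key: mean(units) for key, units in history.items()}
    return sorted(forecast.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical sales history
sales = [
    {"year": 2023, "season": "winter", "market": "DE", "size": "M", "color": "navy", "material": "wool", "units": 420},
    {"year": 2024, "season": "winter", "market": "DE", "size": "M", "color": "navy", "material": "wool", "units": 480},
    {"year": 2023, "season": "winter", "market": "DE", "size": "L", "color": "black", "material": "polyester", "units": 300},
    {"year": 2024, "season": "winter", "market": "DE", "size": "L", "color": "black", "material": "polyester", "units": 260},
    {"year": 2024, "season": "summer", "market": "DE", "size": "M", "color": "navy", "material": "wool", "units": 40},
]

for combo, units in forecast_by_attributes(sales, "winter", "DE"):
    print(combo, units)
```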

Channel-specific content performance measurement

You need different content approaches for different channels. What converts on Amazon differs from what works on your own e-commerce site. What drives sales in retail differs from what succeeds in B2B catalogs.

Analytics can tell you:

- Which product descriptions generate the highest conversion rates by channel?

- How do different image sequences affect bounce rates?

- What attribute information correlates with purchase decisions?
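The first of these questions reduces to counting views and orders per channel and content variant. A minimal sketch, assuming session logs with hypothetical `channel`, `variant` and `converted` fields; in practice you would also need enough traffic, or a significance test, before trusting the winner.

```python
from collections import defaultdict


def conversion_by_channel(sessions):
    """Conversion rate per (channel, description variant) from session logs."""
    views = defaultdict(int)
    orders = defaultdict(int)
    for s in sessions:
        key = (s["channel"], s["variant"])
        views[key] += 1
        orders[key] += s["converted"]  # 1 if the session ended in a purchase
    return {key: orders[key] / views[key] for key in views}


def best_variant_per_channel(rates):
    """Pick the highest-converting description variant for each channel."""
    best = {}
    for (channel, variant), rate in rates.items():
        if channel not in best or rate > best[channel][1]:
            best[channel] = (variant, rate)
    return {channel: variant for channel, (variant, _) in best.items()}


# Hypothetical session logs
sessions = (
    [{"channel": "web", "variant": "short", "converted": c} for c in [1, 0, 0, 0]]
    + [{"channel": "web", "variant": "long", "converted": c} for c in [1, 1, 0, 0]]
    + [{"channel": "amazon", "variant": "short", "converted": c} for c in [1, 1, 1, 0]]
    + [{"channel": "amazon", "variant": "long", "converted": c} for c in [1, 0, 0, 0]]
)
rates = conversion_by_channel(sessions)
print(best_variant_per_channel(rates))  # {'web': 'long', 'amazon': 'short'}
```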

Customer journey analytics tied to data quality

Product data quality directly impacts customer behavior throughout the purchase journey. Poor product information increases bounce rates. Missing specifications reduce conversion. Inconsistent descriptions across channels erode trust.

To measure these relationships, you need analytics that connect data quality metrics to customer behavior patterns. When you can quantify, for example, that a missing product dimension reduces conversions by 12%, data quality transforms from a compliance requirement into a revenue optimization initiative.
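A first-pass version of that measurement compares conversion rates for products with and without a given attribute filled in. The sketch below uses invented numbers chosen to produce a 12% relative gap. Keep in mind this is a correlation: the two groups may differ in other ways, so a controlled A/B test is the safer way to attribute the gap to the missing attribute.

```python
def conversion_gap(products, attribute):
    """Compare conversion rates of products with and without an attribute filled in."""
    def rate(group):
        views = sum(p["views"] for p in group)
        orders = sum(p["orders"] for p in group)
        return orders / views if views else 0.0

    with_attr = [p for p in products if p["attributes"].get(attribute)]
    without_attr = [p for p in products if not p["attributes"].get(attribute)]
    r_with, r_without = rate(with_attr), rate(without_attr)
    # Relative drop in conversion when the attribute is missing or empty
    relative_drop = (r_with - r_without) / r_with if r_with else 0.0
    return r_with, r_without, relative_drop


# Hypothetical product performance data
products = [
    {"sku": "A", "views": 1000, "orders": 50, "attributes": {"dimensions": "40x30x20 cm"}},
    {"sku": "B", "views": 1000, "orders": 50, "attributes": {"dimensions": "35x25x15 cm"}},
    {"sku": "C", "views": 1000, "orders": 44, "attributes": {}},
    {"sku": "D", "views": 1000, "orders": 44, "attributes": {"dimensions": ""}},
]
r_with, r_without, drop = conversion_gap(products, "dimensions")
print(f"{r_with:.3f} vs {r_without:.3f}: {drop:.0%} lower without dimensions")
```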

Predictive content optimization

The most advanced organizations use predictive analytics to optimize content before it goes live.

Machine learning models analyze historical performance data, seasonal trends and competitive intelligence to recommend optimal content strategies for new products. They predict which keywords will drive traffic, which product features to emphasize and which images will generate the highest engagement. This moves content creation from art to science.

ROI measurement for data initiatives

Traditional PIM metrics focus on data completeness and accuracy. Advanced analytics expand this to business impact measurement.

You can track how improving product data completeness affects:

- Search ranking improvements across channels

- Conversion rate increases by product category

- Customer satisfaction scores and return rates

- Time-to-market for new product launches

When you are armed with this data, your conversations with leadership change completely. Instead of requesting a budget for "better data quality", you can present investments with quantifiable ROI projections.

If you start building these analytical capabilities now, you build a lasting competitive advantage as market dynamics accelerate and customer expectations keep rising.

How to prepare your PIM strategy for these trends and changes

Recognizing these PIM trends is one thing, but preparing your organization for them is another challenge entirely.

The good news is that you don’t need to tackle everything at once. The key is understanding where you stand today and building a roadmap that addresses your most critical gaps first.

1. Assess how ready your data is today

Start with an honest evaluation of your existing data architecture. Most organizations find significant blind spots during this process.

Audit your data quality metrics beyond simple completeness scores. Be sure to evaluate:

- Consistency across all channels and touchpoints

- Accuracy of technical specifications

- Timeliness of product data updates

Map out your current data flows to spot bottlenecks and manual intervention points, and document your governance processes carefully.

- Who can make changes to product data?

- How long does it take for changes to propagate across systems?

- Where do approval workflows create delays?

Once you have done this assessment, you should have a clearer picture of which trends you can address immediately and which ones need foundational improvements first.

2. Evaluate your technology architecture for composable capabilities

Your current PIM system may not support the modular approach you need for composable architecture.

Start by evaluating your API capabilities:

- Can external systems easily integrate with your product data?

- Do you have robust authentication and rate limiting?

- Can you expose data in real time rather than with batch exports?
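Rate limiting is usually handled by an API gateway, but the underlying idea is easy to check for yourself. Below is a minimal token-bucket sketch: each client may burst up to `capacity` requests, and tokens refill at `rate` per second. The class and parameter names are illustrative; the injectable clock exists only so the example runs deterministically.

```python
import time


class TokenBucket:
    """Token-bucket rate limiter: bursts up to `capacity`, refills at `rate` tokens per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity
        self.last = clock()

    def allow(self):
        now = self.clock()
        # Refill based on elapsed time, never exceeding the bucket's capacity
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class FakeClock:
    """Manually advanced clock for a reproducible demo."""

    def __init__(self):
        self.t = 0.0

    def __call__(self):
        return self.t


clock = FakeClock()
bucket = TokenBucket(rate=2, capacity=3, clock=clock)
results = [bucket.allow() for _ in range(4)]      # burst of 3 allowed, 4th rejected
clock.t += 1.0                                    # one second later: 2 tokens refilled
after_refill = [bucket.allow() for _ in range(3)]
print(results, after_refill)
```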

Then assess your cloud infrastructure readiness. Composable architecture works best with cloud-native deployment models that can scale individual components independently.

The critical question is integration complexity. How difficult would it be to replace individual components of your current system? Where are you most locked into proprietary formats or processes?

3. Assess your readiness for sustainability compliance

To comply with Digital Product Passport requirements, you probably need data you don’t collect today.

Map your supply chain data visibility first. How much information do you have about material sourcing, manufacturing processes and transportation? The gaps between what you know and what regulations will require are usually substantial.

Then evaluate your supplier data collection capabilities:

- Can your suppliers provide the detailed sustainability data you will need?

- Do you have systems to validate and standardize this information?

Consider your long-term data storage requirements. Sustainability data needs to be maintained for the whole product lifecycle, potentially spanning decades.

4. Plan for AI integration and build data preparation strategies

For any generative AI to give you reliable results, you need clean, well-structured data.

So, assess your data standardization across product categories first. Inconsistent attribute names, units of measure or classification schemes will limit AI effectiveness.

Then, start standardizing the data sets you plan to use for AI applications before you move forward.
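Standardization often starts with two simple tables: one that maps attribute-name variants to a canonical name, and one that converts units to a single base unit. The minimal sketch below shows both for weight. The alias table is hypothetical (in practice it would come out of your catalog audit), and the code assumes values arrive as "amount unit" separated by a space. The pound and ounce factors are the exact international definitions.

```python
# Hypothetical alias table built from a catalog audit
ATTRIBUTE_ALIASES = {
    "weight": "weight_kg",
    "wt": "weight_kg",
    "item_weight": "weight_kg",
    "colour": "color",
}

# Conversion factors to kilograms
UNIT_TO_KG = {"kg": 1.0, "g": 0.001, "lb": 0.45359237, "oz": 0.028349523125}


def standardize(record):
    """Map attribute names to canonical names and convert weights to kilograms."""
    clean = {}
    for name, value in record.items():
        normalized = name.strip().lower()
        key = ATTRIBUTE_ALIASES.get(normalized, normalized)
        if key == "weight_kg" and isinstance(value, str):
            amount, unit = value.split()  # assumes "2.5 lb" style values
            value = round(float(amount) * UNIT_TO_KG[unit.lower()], 3)
        clean[key] = value
    return clean


print(standardize({"Item_Weight": "2.5 lb", "Colour": "Navy"}))  # {'weight_kg': 1.134, 'color': 'Navy'}
print(standardize({"wt": "750 g", "material": "wool"}))          # {'weight_kg': 0.75, 'material': 'wool'}
```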

Content quality matters more than you might expect. AI models trained on poor-quality product descriptions will generate poor-quality output. So, clean up your foundational content before implementing AI-powered automation.

When it is time for pilot projects, you need to plan those carefully. Some words of advice:

- Start with use cases that deliver immediate value without requiring perfect data

- Data quality validation and attribute mapping are often good starting points

- Build confidence with smaller wins before tackling complex implementations

5. Create a roadmap for change management and team capability development

As you may have experienced, technology changes are often easier than organizational changes.

Your team will need new skills for these emerging capabilities:

- Data analysts need to understand AI model performance

- Content creators need to work with predictive optimization tools

- IT teams need to manage composable architectures

Here are some ways to begin transforming your workflows:

- Start training programs now. The learning curve for these technologies is significant, so if you wait until implementation begins, you are risking your timeline.

- Plan for new roles and responsibilities. Someone needs to own AI model performance. Someone needs to manage sustainability data compliance. Someone needs to orchestrate data flows across composable systems.

- Build cross-functional collaboration frameworks. These trends break down traditional silos between IT, marketing, operations and compliance teams. You need new processes that enable you to make integrated data-driven decisions.

The sooner you start and invest in preparations, the smoother the transitions will be. It means you don’t need to rush implementations or deal with extra organizational resistance to change.

But you may be asking yourself: how can this be accomplished without a strong data foundation? That’s where master data management can be a game changer.

How Stibo Systems master data management creates the right conditions to benefit from all these trends

Understanding these PIM trends is valuable, but having the technology infrastructure to execute on them is what gives you competitive advantage.

At Stibo Systems, we have been building next-level PIM capabilities specifically designed for this new era of product information management with our Product Experience Data Cloud (PXDC).

What makes PXDC different

Conventional PIM platforms focus on storing and managing basic product data.

PXDC transforms product information into rich, dynamic experiences that drive customer engagement and business growth. It sits on our multidomain platform, letting you manage product data alongside customer, supplier and location information in a unified system.

Instead of retrofitting legacy systems, you get a complete platform purpose-built for AI integration, composable architecture, regulatory compliance and a lot more.

AI-powered automation across the entire product data lifecycle

We integrate Gen AI capabilities directly into core PIM workflows within PXDC.

Our AI-powered data quality validation happens automatically as your products move through your system, learning your data patterns and flagging anomalies that rule-based systems miss.

Our Enhanced Content and AI-Generated Content services work continuously in the background, analyzing performance across channels and adjusting product descriptions based on real conversion data. They also help translation and localization happen at enterprise scale without manual bottlenecks.

Composable architecture supporting MACH principles

PXDC follows MACH architecture principles from the ground up:

- API-first development makes every function accessible through robust interfaces

- Microservices architecture lets you scale individual components independently

- Cloud-native deployment provides flexibility without vendor lock-in

You can integrate best-of-breed solutions for search, analytics or personalization, and our extensive syndication and integration capabilities let you do it without complex customization.

Built-in sustainability compliance and Digital Product Passport readiness

We've integrated compliance preparation into the core data model of PXDC through our Product Sustainability Data cloud service.

Our platform includes pre-built data structures for sustainability tracking, material composition documentation and lifecycle management.

Carbon footprint calculation tools help you meet reporting requirements, and of course, our system maintains audit trails and documentation standards that regulatory bodies expect.

Real-time synchronization capabilities with enterprise systems

PXDC uses an event-driven architecture that eliminates batch processing delays.

ERP integration happens in real-time through our multidomain platform, so inventory changes propagate immediately to all channels via our Product Data Syndication service.

We include monitoring and rollback capabilities within PXDC to ensure reliability when changes cause problems.

Advanced analytics through Digital Shelf Analytics

Our Digital Shelf Analytics cloud service connects product data quality directly to business performance metrics.

You can track how data completeness affects conversion rates by channel and measure how data quality improvements translate to revenue gains.

PXDC also comes with ROI measurement tools that help turn data quality from a cost center into a revenue optimization initiative. When you can demonstrate, for example, that completing product dimensions increases conversions by 12%, funding decisions become much easier.

Is your PIM ready for 2026?

With all these trends to consider, it's safe to say that significant value is up for grabs.

If you are a large organization looking to capitalize on these opportunities, PXDC lays the perfect foundation for you. It removes the complexity of managing multiple vendors. It reduces implementation risk. It accelerates time-to-value.

Prepare your organization’s product data not just for these trends, but for almost any trend the world throws at you. Learn more about Stibo Systems Product Experience Data Cloud (PXDC) and build a strong data foundation for the changes soon to come.

Frequently asked questions

What is the timeline for Digital Product Passport compliance and how should I prepare?

The EU Digital Product Passport requirements begin rolling out in 2026 for specific product categories, with full implementation by 2030.

You need to start auditing your current data collection capabilities, identifying gaps in sustainability tracking and implementing systems that can capture material composition, carbon footprint and lifecycle data from manufacturing through disposal.

How do I know if my current PIM system can handle composable architecture?

If your system offers strong APIs, supports microservices integration and allows modular deployment of new features, you are in good shape.

If your PIM requires significant customization for basic integrations or forces you to use bundled solutions for every function, you are dealing with a monolithic approach that can hold back your data quality and limit your future flexibility.

What specific AI capabilities should I prioritize in my PIM strategy?

Focus on automated data quality validation, predictive attribute mapping across product hierarchies and intelligent taxonomy management first. These foundational AI applications will give you immediate efficiency gains while preparing your data infrastructure for more advanced analytics down the road.

How can I achieve real-time data synchronization between my ERP and PIM systems?

You need an event-driven architecture that triggers instant updates when data changes occur in any system.

This means moving away from those scheduled batch processes toward API-based integrations that handle live pricing updates, inventory changes and product information modifications as they happen.

What ROI should I expect from advanced PIM analytics?

You will typically see improvements in demand-forecasting accuracy, reduced time-to-market for new products and increased conversion rates through optimized content performance.

Your specific ROI will depend on your current data quality and the complexity of your product catalog.

How do I build internal capabilities for these PIM trends?

You need cross-functional teams that combine data management expertise with sustainability knowledge and AI literacy.

This means training your existing staff on composable architecture principles and establishing governance frameworks that can handle real-time data flows across multiple systems.